Machine-Learning System Exploration Tools

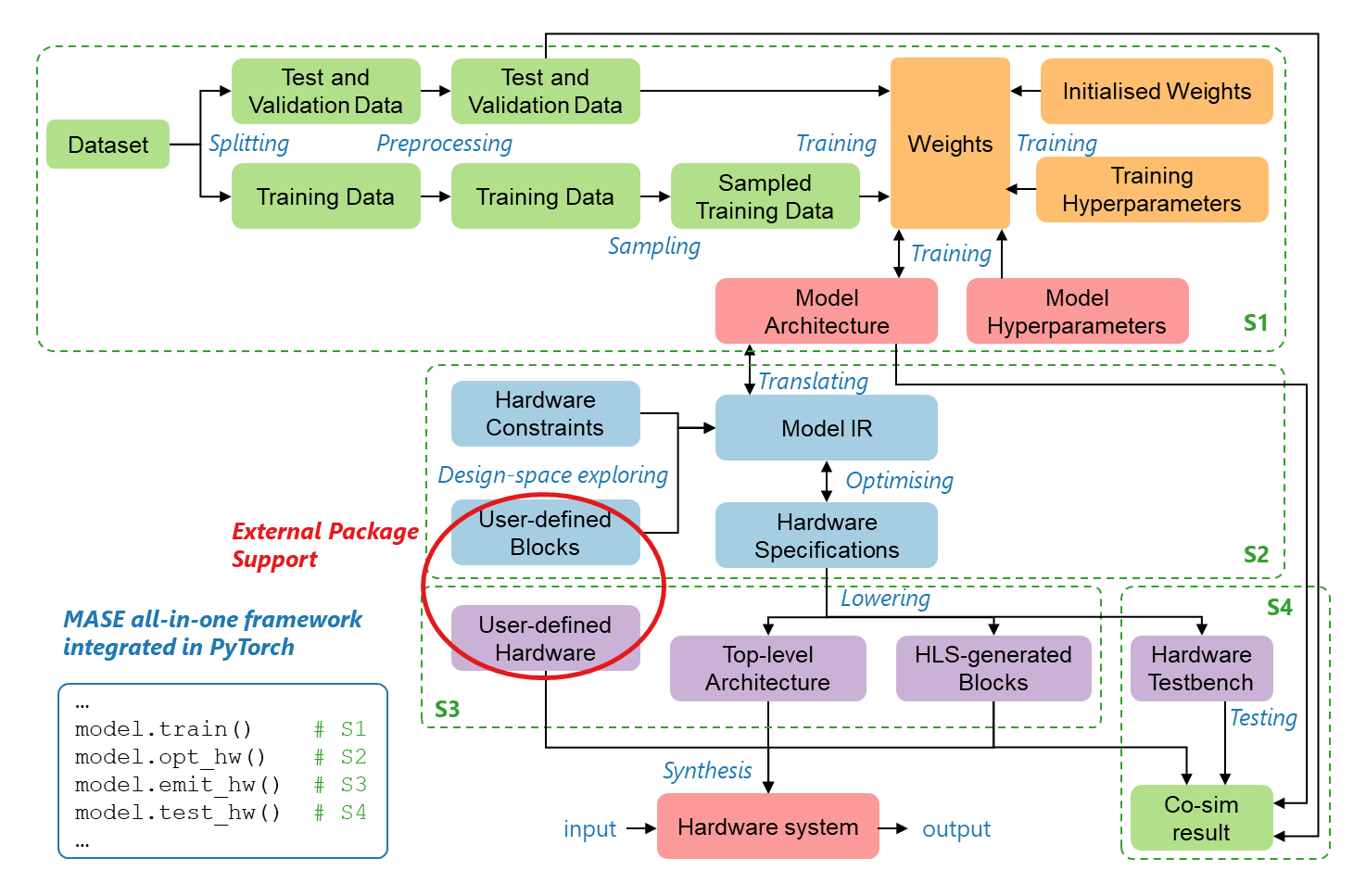

MASE is a machine-learning compiler based on PyTorch FX, maintained by researchers at Imperial College London. We provide a set of tools for optimizing the inference and training of state-of-the-art language and vision models. The following features are supported, among others:

Quantization Search: mixed-precision quantization of any PyTorch model. We support microscaling and other numerical formats at various granularities.

Quantization-Aware Training (QAT): finetuning quantized models to minimize accuracy loss.

Distributed Deployment: automatic parallelization of models across distributed GPU clusters, based on the Alpa algorithm.
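The block-wise numerical formats mentioned under Quantization Search can be illustrated with a small sketch. It assumes a simplified, integer-element variant of microscaling: each block of `block_size` values shares one power-of-two scale, and each element is stored as a signed `bits`-bit integer. Real microscaling (MX) formats, such as those in the OCP MX specification, also use low-precision floating-point elements and fix details such as the block size and scale encoding. The function names here are hypothetical and are not part of the MASE API.

```python
import math
from typing import List, Tuple


def quantize_block(block: List[float], bits: int) -> Tuple[int, List[int]]:
    """Quantize one block to signed `bits`-bit integers sharing one power-of-two scale.

    Returns (shared_exponent, codes) such that value ~= code * 2**shared_exponent.
    """
    qmax = 2 ** (bits - 1) - 1  # largest positive code, e.g. 127 for 8 bits
    amax = max(abs(x) for x in block)
    if amax == 0.0:
        return 0, [0] * len(block)
    # Smallest power-of-two scale for which amax / scale still fits within qmax
    exp = math.ceil(math.log2(amax / qmax))
    scale = 2.0 ** exp
    codes = [max(-qmax, min(qmax, round(x / scale))) for x in block]
    return exp, codes


def dequantize_block(exp: int, codes: List[int]) -> List[float]:
    """Map integer codes back to real values using the block's shared scale."""
    return [c * 2.0 ** exp for c in codes]


def fake_quantize(values: List[float], bits: int, block_size: int) -> List[float]:
    """Quantize then dequantize `values`, using one shared scale per block of `block_size`."""
    out: List[float] = []
    for start in range(0, len(values), block_size):
        exp, codes = quantize_block(values[start:start + block_size], bits)
        out.extend(dequantize_block(exp, codes))
    return out


if __name__ == "__main__":
    x = [0.25, 0.5, 6.0, -4.0]
    print(fake_quantize(x, bits=4, block_size=4))  # → [0.0, 0.0, 6.0, -4.0]
    print(fake_quantize(x, bits=4, block_size=2))  # → [0.25, 0.5, 6.0, -4.0]
```

The block size acts as the granularity knob: at 4 bits, a single shared scale for all four values rounds the two small values to zero, whereas blocks of two recover them exactly. A mixed-precision search would explore choices like `bits` and `block_size` per layer, trading accuracy against memory and compute.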
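Quantization-aware training, as listed among the features, is commonly implemented with fake quantization and a straight-through estimator (STE): the forward pass uses quantized weights, while the backward pass treats rounding as the identity so that gradients update a full-precision copy of the weights. The sketch below applies this to a one-parameter linear model in plain Python. It is a generic illustration of the technique, not MASE's QAT implementation, and the fixed `scale`, learning rate, and step count are arbitrary assumptions.

```python
from typing import List, Tuple


def fake_quant(w: float, scale: float, bits: int) -> float:
    """Round w to the nearest point of a signed `bits`-bit grid with step `scale`."""
    qmax = 2 ** (bits - 1) - 1
    q = max(-qmax, min(qmax, round(w / scale)))
    return q * scale


def train_qat(xs: List[float], ys: List[float], bits: int = 4, scale: float = 0.25,
              lr: float = 0.05, steps: int = 200) -> Tuple[float, float]:
    """Fit y ~= w * x with the weight quantized in the forward pass.

    Returns (full-precision weight, quantized weight used at inference).
    """
    w = 0.0  # full-precision "shadow" weight updated by gradient descent
    n = len(xs)
    for _ in range(steps):
        wq = fake_quant(w, scale, bits)  # forward pass sees only the quantized weight
        # Mean squared error L = mean((wq*x - y)^2), so dL/dwq = mean(2*(wq*x - y)*x)
        grad_wq = sum(2.0 * (wq * x - y) * x for x, y in zip(xs, ys)) / n
        # STE: approximate d(wq)/d(w) as 1, so the gradient passes straight to w
        w -= lr * grad_wq
    return w, fake_quant(w, scale, bits)


if __name__ == "__main__":
    w, wq = train_qat([1.0, 2.0, 3.0], [1.3, 2.6, 3.9])
    print(w, wq)
```

Because the true slope 1.3 lies between the grid points 1.25 and 1.5, the shadow weight keeps crossing the rounding threshold at 1.375, so the quantized weight ends on one of those two neighbors rather than settling on a single value. This oscillation is a known side effect of STE-based training.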
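The Alpa approach referenced under Distributed Deployment combines intra-operator parallelism (sharding individual operators across devices) with inter-operator parallelism (splitting the model into pipeline stages). As a rough illustration of the inter-operator side only, the sketch below uses dynamic programming to split a chain of layers with known costs into contiguous pipeline stages. It assumes equal-speed devices, no communication cost, and a linear chain of layers. Under those assumptions, a pipeline-latency objective of the form used in the Alpa paper (the sum of stage times plus B - 1 times the slowest stage, for B microbatches) reduces to minimizing the slowest stage, because the sum is fixed. This is a simplified stand-in, not Alpa's actual algorithm or MASE's implementation, and the function names are hypothetical.

```python
from functools import lru_cache
from typing import List, Tuple


def partition_stages(costs: List[float], num_stages: int) -> Tuple[float, List[List[float]]]:
    """Split a chain of layer costs into `num_stages` contiguous stages,
    minimizing the cost of the slowest stage (the pipeline bottleneck)."""
    n = len(costs)
    if not 1 <= num_stages <= n:
        raise ValueError("need 1 <= num_stages <= len(costs)")
    prefix = [0.0]  # prefix[i] = total cost of costs[:i]
    for c in costs:
        prefix.append(prefix[-1] + c)

    @lru_cache(maxsize=None)
    def best(i: int, k: int) -> Tuple[float, int]:
        """(min bottleneck for costs[i:] in k stages, end index of the first stage)."""
        if k == 1:
            return prefix[n] - prefix[i], n
        result = (float("inf"), -1)
        # First stage is costs[i:j]; leave at least one layer for each remaining stage
        for j in range(i + 1, n - k + 2):
            cand = max(prefix[j] - prefix[i], best(j, k - 1)[0])
            if cand < result[0]:
                result = (cand, j)
        return result

    # Walk the stored split points to recover the stages themselves
    stages, i = [], 0
    for k in range(num_stages, 0, -1):
        _, j = best(i, k)
        stages.append(costs[i:j])
        i = j
    return best(0, num_stages)[0], stages


def pipeline_latency(stage_times: List[float], num_microbatches: int) -> float:
    """Time for the first microbatch to traverse all stages, plus one
    bottleneck-stage time for each remaining microbatch."""
    return sum(stage_times) + (num_microbatches - 1) * max(stage_times)


if __name__ == "__main__":
    bottleneck, stages = partition_stages([4, 2, 3, 1, 5, 1], num_stages=3)
    print(bottleneck, stages)  # → 6.0 [[4], [2, 3, 1], [5, 1]]
    print(pipeline_latency([sum(s) for s in stages], num_microbatches=8))  # → 58
```

With stage times 4, 6, and 6 and eight microbatches, the latency is 16 + 7 × 6 = 58. The full Alpa approach also decides how devices are grouped for each stage and how operators are sharded within it, which this sketch omits.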

For more details, refer to the Tutorials. If you enjoy using the framework, you can support us by starring the repository on GitHub!

Efficient AI Optimization

MASE provides a set of composable, modular tools for optimizing AI models. The tools can be combined in different ways to optimize for inference, training, or both; to target different hardware, including CPUs and GPUs; and to serve a range of applications, including computer vision, natural language processing, and speech recognition.

Documentation

For more details, explore the documentation:

Machop API

Advanced Deep Learning Systems